Artificial intelligence (AI) is here, and here to stay. It is producing exciting, previously unimaginable opportunities in healthcare and cyber security on the one hand, and opportunities for misuse, mayhem, and cybercrime on the other.

In the cyber security world, on the positive side of the ledger, AI is being used for threat detection and response, intrusion detection and prevention, malware detection and analysis, vulnerability management, user behaviour analytics, and security automation and orchestration. On the negative side, AI is being used to streamline criminals' operations, making them more efficient, sophisticated, and scalable, while allowing them to evade detection.

For example, exploiting ChatGPT’s popularity, threat actors have created a copycat hacker tool, named FraudGPT, to facilitate malicious activities. FraudGPT is an AI bot designed for offensive cybercrime activities, available on Dark Web markets and Telegram.

Using it, a skilled cybercriminal can easily craft emails that target recipients and lure them into clicking on malicious links. FraudGPT is available on a subscription basis, with pricing ranging from $200 per month to $1,700 per year.

Another AI-driven cybercrime tool, named WormGPT, was recently discovered. The WormGPT project aims to be a cybercrime alternative to ChatGPT, "one that lets you do all sorts of illegal stuff and easily sell it online in the future."

According to zdnet.com, WormGPT is described as "similar to ChatGPT but has no ethical boundaries or limitations." ChatGPT has a set of rules in place to try to stop users from abusing the chatbot.

These rules include refusing to complete tasks related to criminality and malware. However, users are constantly finding ways to circumvent these limitations with the right prompts. WormGPT has no such limits: it can "generate an email intended to pressure an unsuspecting account manager into paying a fraudulent invoice." The team at zdnet.com was surprised at how well the language model managed the task, branding the result "remarkably persuasive [and] also strategically cunning."

Cybercriminals are using AI tools to orchestrate highly targeted phishing campaigns. They use AI to analyse enormous amounts of information, including leaked data, to identify vulnerabilities or high-value targets for more precise and effective attacks.

AI is also employed to assist with the scale and effectiveness of social engineering attacks, learning to spot patterns in behaviour to convince people that a communication is legitimate, then persuading them to compromise networks and hand over sensitive data.

It is likely we have all heard of deep fakes. Cybercriminals are using AI to create fake images, audio, and videos. From high-school students using AI to create fake nude pictures of other students and teachers, to a finance worker who was recently convinced to pay out $40m after a deep fake video call with his CFO and team, AI software is being used to alter reality.

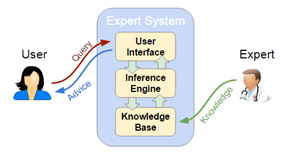

Back on the positive side, AI is being used in healthcare to assist in diagnosing diseases by analysing patient data and symptoms. An AI medical expert system designed to diagnose bacterial infections might ask questions such as:

• What is the patient’s temperature?

• What is the patient’s blood pressure?

• Does the patient have a cough?

• Does the patient have a sore throat?

• Does the patient have a runny nose?

• Does the patient have any other symptoms?

Based on the answers to these questions, the expert system would use its knowledge base and rules to arrive at a diagnosis. The benefits of this application of AI are:

• Enhanced diagnostic accuracy, early detection and prevention: AI systems can analyse complex medical data and recognise patterns that may be missed by human doctors, leading to more accurate diagnoses. AI systems can identify diseases at an earlier stage, which can lead to better outcomes and potentially save lives.

• Increased speed and efficiency, lowering healthcare costs: AI can process vast amounts of medical data quickly, providing rapid diagnostic suggestions and allowing healthcare professionals to focus on patient care. By streamlining the diagnostic process, AI can help reduce healthcare costs and make treatments more affordable.

• Consistency: AI systems can provide consistent diagnostic results, reducing the variability that can occur with different human practitioners.

• Accessibility: AI medical expert systems can be used in remote or underserved areas, improving access to quality healthcare for people who might otherwise not have access.

These benefits demonstrate the potential of AI to transform healthcare by supporting medical professionals and enhancing patient outcomes. However, it is important to recognise that AI technology is a tool to assist healthcare providers and not a replacement for their expertise and judgment. We will come back to this point again.
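The question-and-rule approach described above for a diagnostic expert system can be sketched in a few lines of Python. This is a toy illustration only: the `Answers` fields, the `RULES` list, the 38.0 °C threshold and the `suggest` function are all hypothetical names and placeholder values chosen for the example, not clinical guidance and not the design of any real system.

```python
from dataclasses import dataclass


@dataclass
class Answers:
    """Answers to a few of the screening questions (hypothetical fields)."""
    temperature_c: float
    cough: bool
    sore_throat: bool
    runny_nose: bool


# The "knowledge base": each rule pairs a suggestion with a condition.
# These rules are illustrative placeholders, NOT medical advice.
RULES = [
    (
        "possible bacterial throat infection: refer for testing",
        lambda a: a.temperature_c >= 38.0
        and a.sore_throat
        and not a.cough
        and not a.runny_nose,
    ),
    (
        "more consistent with a common cold",
        lambda a: a.temperature_c < 38.0 and a.runny_nose,
    ),
]


def suggest(answers: Answers) -> list[str]:
    """Return every suggestion whose rule matches the answers."""
    matches = [label for label, condition in RULES if condition(answers)]
    # When no rule applies, the system has nothing to offer and defers.
    return matches or ["no rule matched: refer to a clinician"]
```

Calling `suggest(Answers(temperature_c=38.6, cough=False, sore_throat=True, runny_nose=False))` returns the first suggestion only. The output is a suggestion for a clinician to weigh, in keeping with the point that such a system assists rather than replaces professional judgment.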

In the home, AI is being developed to take over routine chores and tasks. Examples include the humanoid robots from Sanctuary AI and Dyson, and Tesla's Optimus robot, which is designed to perform simple tasks like watering plants and carrying boxes.

The Vancouver-based firm Sanctuary AI is developing a humanoid robot called Phoenix which, when complete, will supposedly understand what we want, understand the way the world works and have the skills to carry out our commands.

The CEO says, however: "There is a long way to go from where we are today …" before an AI robot is doing our laundry or cleaning the bathroom.

In the UK, Dyson is investing in AI and robotics aimed at household chores. Tesla is working on the Optimus humanoid robot. During the annual Tesla AI Day presentations, people were shown a video of Optimus performing simple tasks, such as watering plants, carrying boxes and lifting metal bars. The robots would be produced at scale, at a cost lower than $20,000 (£17,900), and be available in three to five years (according to the BBC, sourced from various articles in 2022 and 2023).

Given all of this information, can we say that AI is good or bad or indifferent? Please consider the idea that “people do good and bad things … technology (whether it is the cave-person’s club or the modern computer and AI) are our tools.”

Yes, this is a well-known philosophical question that has been raised many times, most notably in relation to the atomic bomb. Regardless of where you stand on the moral and ethical issues related to AI, it is important to remember that while an AI can write software that allows a computer to recognise a face, recognise speech, turn speech to text, talk with us, answer questions, create fake images, and the like, AI cannot do anything it is not programmed to do … it is software. It is a computer program.

The problem I see is that it will get to a point, if it has not already done so, where we can no longer predict the outcomes of an AI due to the number and speed of the complex interactions involved. But an AI is not intelligent. It cannot think, judge, handle exceptions and nuance, or exhibit intelligence as a human does; it can only do what it is programmed to do.

For example, how long will you sit at a red light before going through it under the following conditions:

- at mid-day with many cars around?

- if you are on your way to the emergency department with your sick child in the car?

- at 2am, with no other cars or any people in sight?

An AI-driven car, if programmed to stop at a red light with no contingencies or alternatives built in, will sit there until the end of time! It is not intelligent. It cannot think or judge or consider context.
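The red-light point can be made concrete with a small Python sketch. Both functions below are hypothetical and greatly simplified: the names `rigid_controller` and `controller_with_contingency`, the `"ask_human"` action and the 300-second threshold are assumptions made for illustration, not how any real vehicle works.

```python
def rigid_controller(light: str) -> str:
    """Stop on red, go otherwise. No notion of time or context."""
    return "stop" if light == "red" else "go"


def controller_with_contingency(
    light: str,
    seconds_waited: float,
    max_wait_s: float = 300.0,  # assumed threshold, for illustration only
) -> str:
    """Same rule, plus one contingency a programmer chose to build in."""
    if light != "red":
        return "go"
    if seconds_waited > max_wait_s:
        # The light may be faulty: hand the decision to a person.
        return "ask_human"
    return "stop"
```

The first version returns `"stop"` for a red light no matter how long the car has waited. The second behaves differently only because someone anticipated that one exception and wrote it down; neither version weighs a sick child in the back seat or an empty street at 2am.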

As of now, only humans can think, judge and make appropriate decisions within context. Maybe there will come a time when a computer program can simulate human intelligence. I do not think it will ever duplicate human intelligence. Humans have multiple forms of intelligence.

Howard Gardner's popular “Theory of Multiple Intelligences” suggests that intelligence is not a single general ability, but rather a set of distinct capabilities. According to Gardner and others, there are many types of intelligence, and these make us human and distinct from any type of artificial intelligence:

1. Linguistic Intelligence: The ability to use words effectively, both orally and in writing.

2. Logical-Mathematical Intelligence: The capacity for inductive and deductive thinking and reasoning, as well as the use of numbers and abstract pattern recognition.

3. Spatial Intelligence: The ability to recognise and manipulate the patterns of wide space as well as more confined areas.

4. Bodily-Kinesthetic Intelligence: The use of one's whole body or parts of the body to solve problems or create products.

5. Musical Intelligence: The ability to produce and appreciate rhythm, pitch, and timbre.

6. Interpersonal Intelligence: The capacity to understand the intentions, motivations, and desires of other people and work effectively with others.

7. Intrapersonal Intelligence: The capacity to understand oneself, to have an effective working model of oneself, including one's own desires, fears, and capacities.

8. Naturalistic Intelligence: The ability to recognise and categorise plants, animals, and other aspects of the natural environment.

9. Existential Intelligence: The capacity to ponder deep questions about human existence.

These intelligences represent different domains of human potential and can be found to varying degrees within each individual. Some of them, I guarantee you, will never be found in an AI or in any non-organic machine or computer program.

By James Carlopio. Dr James Carlopio is Executive Director at Cultural Cyber Security. Dr Carlopio has worked on cultural and technology transformation projects for numerous Australian, European and US-based organisations. He has worked with organisations such as the United Nations (ACT/EMP) in Geneva and Zurich, Switzerland, and with Origin. He has published over three dozen articles and five books on various socio-technical issues and has written a regular section for the Australian Financial Review BOSS magazine. Visit: www.culturalcybersecurity.com